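Analyzing a latency increase caused by service scale-out

Symptoms

A backend service acts as an entry gateway and accepts both taf-protocol and HTTP traffic.

taf traffic grows at the same time every day, so the service is scaled out on a daily schedule.

After the most recent scheduled scale-out, a flood of AI anomaly alerts reported that latency had risen for all HTTP requests.

The history showed that every scheduled scale-out had in fact always raised HTTP latency. The scaling policy had recently been changed to scale out earlier in the day, so the latency increase moved earlier too, and that shift is what the AI detector flagged as an anomaly.

Analysis

First, I used tracing to find out exactly where the time was going: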

| Span kind | Service | Remote service | Start time | End time | Duration |
|---|---|---|---|---|---|
| server | nginx | | 2024-06-12 18:14:47.512 | 2024-06-12 18:14:47.933 | 421 ms |
| client | nginx | gateway | 2024-06-12 18:14:47.642 | 2024-06-12 18:14:47.933 | 291 ms |
| server | gateway | | 2024-06-12 18:14:47.675 | 2024-06-12 18:14:47.867536 | 192.536 ms |
| client | gateway | production service | 2024-06-12 18:14:47.675 | 2024-06-12 18:14:47.783512 | 108.512 ms |

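From nginx's client span sending at 2024-06-12 18:14:47.642 to gateway receiving at 2024-06-12 18:14:47.675 took 33 ms. A ping from the Shanghai data center to the Guangzhou data center shows an RTT of 25 ms, so this is roughly in line with expectations.

The network looks fine, so the next step is the time spent inside each service, i.e. the difference between a service's server span and its client span: nginx spent 421 - 291 = 130 ms, and gateway spent 192.536 - 108.512 = 84.024 ms.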
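The self-time arithmetic can be written as a small helper. This is a minimal sketch that uses the timestamps and durations copied from the trace table above; the function names are hypothetical and not part of any tracing API.

```python
from datetime import datetime

TS_FORMAT = "%Y-%m-%d %H:%M:%S.%f"  # e.g. 2024-06-12 18:14:47.867536

def elapsed_ms(start: str, end: str) -> float:
    """Milliseconds between two trace timestamps."""
    delta = datetime.strptime(end, TS_FORMAT) - datetime.strptime(start, TS_FORMAT)
    return delta.total_seconds() * 1000

def self_time_ms(server_span_ms: float, client_span_ms: float) -> float:
    """Time spent inside a service: its server span minus the client span
    it spends waiting on the next hop."""
    return server_span_ms - client_span_ms

nginx_self = self_time_ms(421, 291)
gateway_self = self_time_ms(192.536, 108.512)
# nginx client span start -> gateway server span start
hop = elapsed_ms("2024-06-12 18:14:47.642", "2024-06-12 18:14:47.675")
gateway_server_span = elapsed_ms("2024-06-12 18:14:47.675", "2024-06-12 18:14:47.867536")

print(f"nginx self time:      {nginx_self:.3f} ms")
print(f"gateway self time:    {gateway_self:.3f} ms")
print(f"nginx -> gateway:     {hop:.3f} ms")
print(f"gateway server span:  {gateway_server_span:.3f} ms")
```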

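gateway is an entry gateway with no business logic that only forwards requests, so this much time is clearly unreasonable.

Let's look at the events recorded on gateway's server span:

| Time | Event | Notes |
|---|---|---|
| 2024-06-12 18:14:47.675603 | `{"event":"beginHandleRequest","queue.lantency":"0"}` | Background: after the network thread finishes decoding a packet, it acts as a producer and puts the packet on a queue for the worker threads to consume. This is when a worker thread took the packet off the queue, with a queue length of 0. |
| 2024-06-12 18:14:47.675605 | `{"event":"message","message.type":"RECEIVED", "message.compressed_size":"1117","message.uncompressed_size":"1117"}` | The worker thread logs the size of the received packet. |
| 2024-06-12 18:14:47.784881 | `{"event":"message","message.type":"SENT", "message.compressed_size":"741342","message.uncompressed_size":"741342"}` | The worker thread has finished processing and logs the size of the response it is about to send. |
| 2024-06-12 18:14:47.784994 | `{"event":"sending Response", "JceCurrent trace time(ms)":"0","code":"2","desc":"send task queued"}` | Background: the worker thread writes the response with write(fd) until it hits EAGAIN; the network thread then registers the fd for EPOLLOUT and performs the remaining writes. This records when the first write finished. |
| 2024-06-12 18:14:47.868137 | `{"event":"afterSendResponse"}` | When the network thread finished writing the whole response with write(fd). |
| 2024-06-12 18:14:47.933 | End of nginx's client span | When nginx finished receiving the whole response. |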

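The last three rows are what matter. The write took 47.868137 - 47.784994 = 0.083143 s in total, about 83 ms. Was the network congested, or was the network thread overloaded?

nginx then finished receiving the response another 47.933 - 47.868137 = 0.064863 s (about 65 ms) later, which is far longer than the RTT. Again this looks like network congestion.

Because the network thread is shared by HTTP and taf traffic, my first hunch was a network problem, and a packet capture was needed to analyze it in detail.

PS: The trace in this section is not the one I started the investigation with. I noticed a request in the packet capture that had tracing enabled and looked up its trace afterwards, so it lines up with the capture in the next section.

Packet capture

Since gateway's sending looked blocked (slow write), I captured packets on gateway.

The real IPs are masked and replaced with nginx and gateway.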

```
18:14:47.652875 IP nginx.58708 > gateway.8080: Flags [S], seq 618454232, win 42340, options [mss 1424,sackOK,TS val 3242399172 ecr 0,nop,wscale 11], length 0
```

nginx announces its MSS to gateway, asking gateway to segment the data it sends to nginx with MSS = 1424. gateway likewise announces its own MSS (1460) to nginx in its SYN-ACK. Each side then uses the smaller of the two values (1424) for the connection. This is commonly called MSS negotiation, and the value matters below.

```
18:14:47.675353 IP nginx.58708 > gateway.8080: Flags [P.], seq 1:1118, ack 1, win 21, options [nop,nop,TS val 3242399177 ecr 885958032], length 1117: HTTP: GET /xxx HTTP/1.1
```

nginx sends the HTTP request GET /xxx. Compare this with the first event on gateway's span, when the worker thread took the packet off the queue at 18:14:47.675603.

Decoding plus enqueueing in the network thread took 47.675603 - 47.675353 = 0.00025 s, i.e. 0.25 ms, which already rules out the network thread as the problem.

```
18:14:47.785014 IP gateway.8080 > nginx.58708: Flags [P.], seq 1:2825, ack 1118, win 32, options [nop,nop,TS val 885958164 ecr 3242399177], length 2824: HTTP: HTTP/1.1 302 ok
```

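As the capture shows, after sending 5 packets totaling 2824 * 5 = 14120 bytes, gateway stalls and waits for nginx's ACK before sending any more.

The negotiated MSS is 1424 bytes, but the TCP Timestamps option takes 12 bytes of options in every segment, so each segment carries at most 1412 bytes of data. Each 2824-byte packet in the capture is therefore two full segments (presumably because the capture was taken on the sender, before TSO/GSO splits them), and 1412 * 10 is exactly 14120 bytes.

This means slow start's initial congestion window is cwnd = 10 segments: gateway was stuck in slow start.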
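The byte arithmetic above can be double-checked in a few lines. A sketch, assuming the MSS values seen in the handshake (1424 from nginx, 1460 from gateway), a 12-byte Timestamps option on every data segment, and an initial window of 10 segments; the helper names are hypothetical.

```python
# 10-byte Timestamps option, padded to 12 bytes with two NOPs
TIMESTAMPS_OPTION_BYTES = 12

def payload_per_segment(mss: int, timestamps: bool = True) -> int:
    """Largest data payload per segment once per-segment TCP options are removed."""
    return mss - (TIMESTAMPS_OPTION_BYTES if timestamps else 0)

def initial_flight_bytes(init_cwnd_segments: int, mss: int) -> int:
    """Bytes the sender may emit before the first ACK comes back."""
    return init_cwnd_segments * payload_per_segment(mss)

mss = min(1424, 1460)                  # smaller of the two advertised MSS values
seg = payload_per_segment(mss)
print(seg, 2824 // seg)                # each 2824-byte capture entry holds 2 segments
print(initial_flight_bytes(10, mss), 5 * 2824)
```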

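For comparison, here is a capture between the same source and destination IPs on a connection that was not going through slow start. There, gateway sent the whole HTTP response in one go:

```
18:13:38.068605 IP nginx.40464 > gateway.8080: Flags [P.], seq 1699878698:1699879586, ack 85155950, win 34, options [nop,nop,TS val 811638094 ecr 4054054047], length 888: HTTP: GET /xxx HTTP/1.1
```

So I made a bold guess:

After gateway was heavily scaled out, nginx's request volume stayed about the same, so each connection carried far fewer requests. The gaps between requests then exceeded the HTTP keep-alive idle timeout on the client or server side, so each request effectively used a new short-lived connection, paying one extra RTT for the three-way handshake plus several RTTs of slow start.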
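To see why a fresh connection hurts so much for this response, here is a rough estimate of how many round trips slow start needs to push the 741342-byte body from the trace. It is a sketch under idealized assumptions (cwnd starts at 10 segments of 1412 bytes and doubles every RTT, no loss, the receive window never limits the sender), so treat its output as an order of magnitude only. The 25 ms RTT is the ping result above, and the helper names are hypothetical.

```python
import math

def slow_start_rounds(total_bytes: int, init_cwnd: int, seg_bytes: int) -> int:
    """Round trips needed to deliver total_bytes when cwnd starts at init_cwnd
    segments and doubles every RTT (idealized: no loss, no rwnd limit)."""
    segments = math.ceil(total_bytes / seg_bytes)
    sent, cwnd, rounds = 0, init_cwnd, 0
    while sent < segments:
        sent += cwnd      # one full window per round trip
        cwnd *= 2         # slow start doubles the window every RTT
        rounds += 1
    return rounds

def extra_latency_ms(rtt_ms: float, rounds: int, new_connection: bool) -> float:
    """Waiting beyond a single flight: one RTT for the handshake on a new
    connection, plus one RTT for every slow-start round after the first."""
    handshake = rtt_ms if new_connection else 0.0
    return handshake + (rounds - 1) * rtt_ms

cold = slow_start_rounds(741342, 10, 1412)
warm = slow_start_rounds(741342, 600, 1412)
print(cold, extra_latency_ms(25, cold, new_connection=True))
print(warm, extra_latency_ms(25, warm, new_connection=False))
```

A connection whose cwnd is already large enough (600 segments in the second call) needs a single flight, which is why keeping connections alive matters here.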

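Verification and fix

In well-known open-source projects, the default HTTP keep-alive idle timeout is typically 60 s. After raising both nginx's client-side timeout and gateway's server-side timeout to 5 minutes, the result was: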

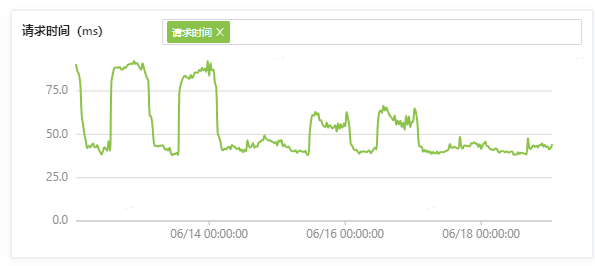

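On June 14 the latency still fluctuated clearly; after a few days of gradual rollout it improved markedly, and once the change was fully rolled out it became a completely flat line.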
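Why does scaling out make connections sparse enough to hit the idle timeout? A back-of-the-envelope model, under loudly stated assumptions: requests arrive as a Poisson process and are spread evenly over the pooled connections, so the gap between two requests on one connection is exponentially distributed. The request rate and connection counts below are hypothetical, not measurements from this incident.

```python
import math

def p_gap_exceeds_timeout(total_rps: float, connections: int, idle_timeout_s: float) -> float:
    """Probability that the gap between consecutive requests on one connection
    exceeds the keep-alive idle timeout (exponential inter-arrival times)."""
    per_conn_rate = total_rps / connections
    return math.exp(-per_conn_rate * idle_timeout_s)

# Hypothetical numbers: 20 req/s spread over 100 connections before the
# scale-out and over 2000 connections after it.
for conns in (100, 2000):
    for timeout in (60, 300):
        p = p_gap_exceeds_timeout(20, conns, timeout)
        print(f"connections={conns:5d} timeout={timeout:3d}s -> P(idle timeout)={p:.4f}")
```

In this model, more connections for the same traffic lengthen the gaps, and a longer idle timeout shrinks the chance that a gap outlives it, which matches the direction of the fix above.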

深入认识慢启动

慢启动只会发生在建连的时候吗?我大致记得发生拥塞的时候也会进行慢启动,有没有可能出现其他的触发条件?

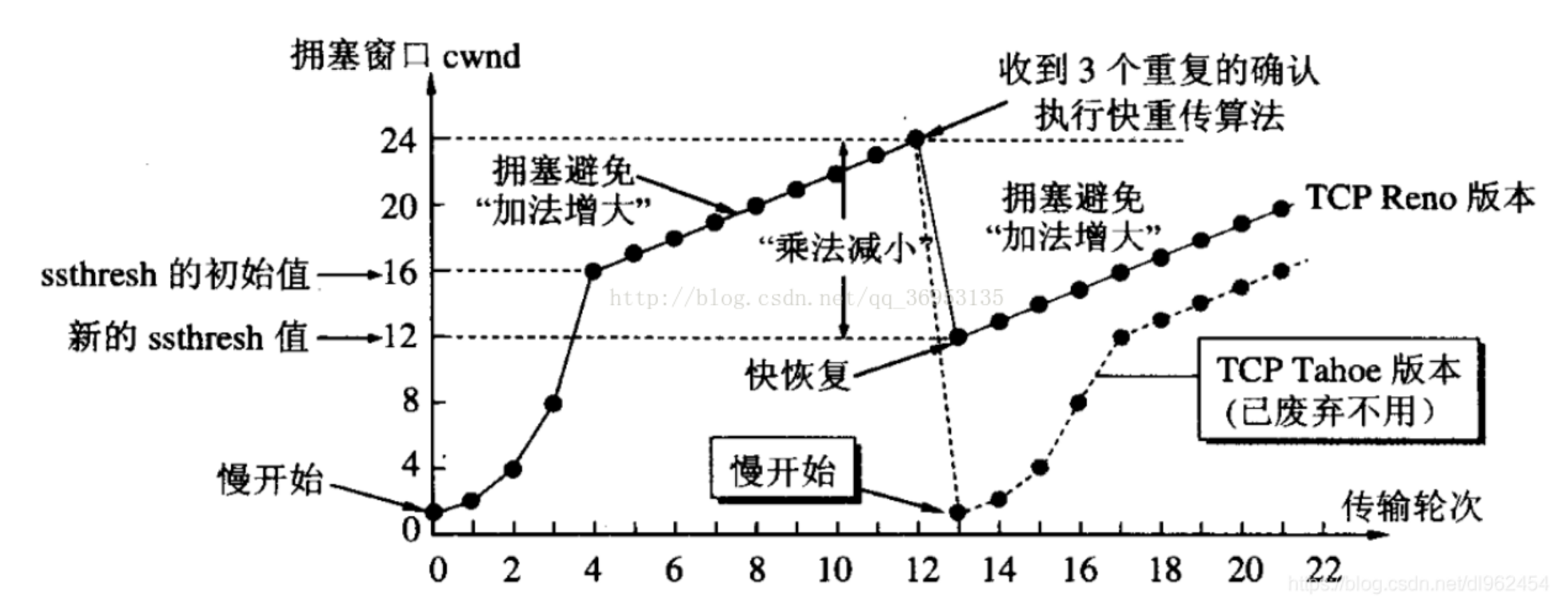

祖传TCP的慢启动

翻开tcp协议详解(卷一),第二十一章超时与重传中21.6详细叙述了慢启动细节:

拥塞避免算法和慢启动算法需要对每个连接维持两个变量:一个拥塞窗口 cwnd和一个慢启动门限ssthresh。这样得到的算法的工作过程如下:

对一个给定的连接,初始化cwnd为1个报文段,ssthresh为65535个字节

- TCP输出例程的输出不能超过 cwnd和接收方通告窗口的大小。拥塞避免是发送方使用的流量控制,而通告窗口则是接收方进行的流量控制。前者是发送方感受到的网络拥塞的估计,而后者则与接收方在该连接上的可用缓存大小有关。

- 当拥塞发生时(超时或收到重复确认),ssthresh被设置为当前窗口大小的一半(cwnd和接收方通告窗口大小的最小值,但最少为 2个报文段)。此外,如果是超时引起了拥塞,则cwnd被设置为1个报文段(这就是慢启动)。

当新的数据被对方确认时,就增加cwnd,但增加的方法依赖于我们是否正在进行慢启动或拥塞避免。如果 cwnd小于或等于ssthresh,则正在进行慢启动,否则正在进行拥塞避免。

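In short, cwnd starts at 1 MSS and ssthresh at 65535 bytes.

For each ACK of new data received while sending:

- if cwnd <= ssthresh, slow start: cwnd = cwnd + MSS, so cwnd doubles roughly every RTT;

- otherwise, congestion avoidance: cwnd = cwnd + MSS * MSS / cwnd, so cwnd grows by about one MSS per RTT.

If congestion occurs along the way (a timeout or duplicate ACKs):

- ssthresh = max(min(cwnd, rwnd) / 2, 2 * MSS), where rwnd is the receiver's advertised window for flow control;

- if the congestion was signaled by a timeout, also set cwnd = MSS.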
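A sketch of these classic rules, with cwnd in bytes and the simplifying assumption that the receiver ACKs every segment and nothing is lost, shows the per-RTT doubling and then the slowdown once ssthresh is crossed. The helper names are hypothetical, and the small extra constant some old BSD implementations added in congestion avoidance is omitted.

```python
def tahoe_on_ack(cwnd: float, ssthresh: float, mss: int) -> float:
    """Window growth for one ACK of new data, cwnd in bytes."""
    if cwnd <= ssthresh:
        return cwnd + mss            # slow start: +1 segment per ACK, doubling per RTT
    return cwnd + mss * mss / cwnd   # congestion avoidance: about +1 segment per RTT

def tahoe_on_timeout(cwnd: float, rwnd: float, mss: int) -> tuple[float, float]:
    """Timeout response. Returns (new_cwnd, new_ssthresh)."""
    ssthresh = max(min(cwnd, rwnd) / 2, 2 * mss)
    return float(mss), ssthresh

def cwnd_per_rtt(rtts: int, mss: int = 1460, ssthresh: float = 65535) -> list[int]:
    """Whole segments in cwnd at the start of each RTT."""
    cwnd = float(mss)
    history = []
    for _ in range(rtts):
        segments = int(cwnd // mss)
        history.append(segments)
        for _ in range(segments):    # one ACK per segment sent in this RTT
            cwnd = tahoe_on_ack(cwnd, ssthresh, mss)
    return history

print(cwnd_per_rtt(8))
print(tahoe_on_timeout(20000, 30000, 1460))
```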

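The slow start and congestion avoidance described above come from TCP Tahoe (1988), which also introduced fast retransmit; TCP Reno (1990) then added fast recovery, covered in section 21.7:

Before describing the change, note that TCP must generate an immediate ACK (a duplicate ACK) when it receives an out-of-order segment. This duplicate ACK should not be delayed. Its purpose is to let the other end know that a segment arrived out of order and to tell it which sequence number is expected.

Since we don't know whether a duplicate ACK is caused by a lost segment or just by reordering, we wait for a small number of duplicate ACKs. If the segments were merely reordered, there should be only one or two duplicate ACKs before the reordered segment is processed and a new ACK is generated.

If three or more duplicate ACKs arrive in a row, it is a strong indication that a segment has been lost. We then retransmit the missing segment without waiting for the retransmission timer to expire. This is the fast retransmit algorithm. Next, congestion avoidance, not slow start, is performed. This is the fast recovery algorithm.

In short, with fast retransmit plus fast recovery, on the third duplicate ACK:

- ssthresh = max(min(cwnd, rwnd) / 2, 2 * MSS);

- the missing segment is retransmitted and cwnd = ssthresh + 3 * MSS; once new data is ACKed, cwnd = ssthresh (roughly half the old window) and congestion avoidance continues.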

现代TCP的慢启动

在tcp协议详解的那个年代之后,还出现了New Reno算法,2006年CUBIC进入linux 2.6.13,近几年就几乎被google的BBR算法完全替代了

对慢启动的深入认识还是需要从RFC和实际实现进行

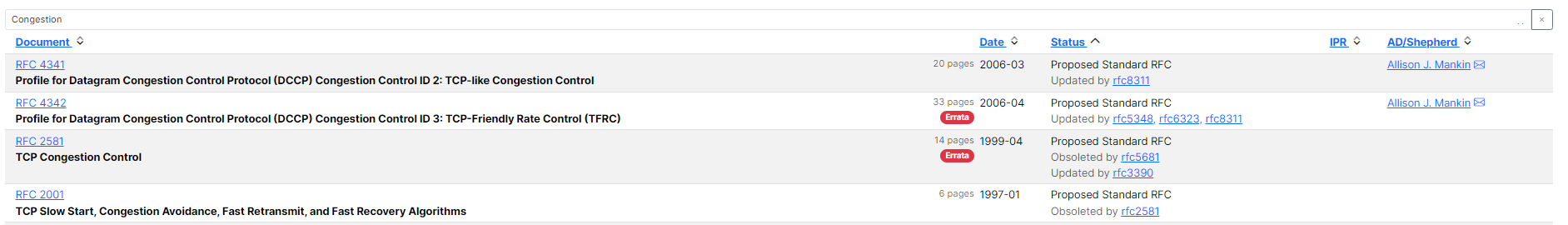

RFC

在RFC文档中搜索TCP然后再筛选Congestion,有4篇Proposed Standard RFC文档

其中RFC2001被RFC2581废弃,RFC2581被RFC5681废弃

那么直接看RFC5681即可,略去了一些初始化环节的叙述,因为初始化环节在RFC6928进行了更新,将初始化窗口提升到了min (10*MSS, max (2*MSS, 14600))
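The RFC 6928 formula can be evaluated directly. Treating the 1412-byte effective segment from the capture as the MSS, it gives exactly the 14120 bytes (10 segments) that gateway sent before stalling. The other MSS values in the loop are just examples, and the function name is hypothetical.

```python
def rfc6928_initial_window(smss: int) -> int:
    """Initial congestion window in bytes: min(10*MSS, max(2*MSS, 14600))."""
    return min(10 * smss, max(2 * smss, 14600))

for mss in (536, 1412, 1460, 8960):
    iw = rfc6928_initial_window(mss)
    print(f"MSS={mss:5d} IW={iw:6d} bytes = {iw // mss} segments")
```

Small and typical MSS values land on 10 segments, while jumbo-frame MSS values are capped by the 14600-byte term and get only a couple of segments.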

The slow start algorithm is used when cwnd < ssthresh, while the congestion avoidance algorithm is used when cwnd > ssthresh. When cwnd and ssthresh are equal, the sender may use either slow start or congestion avoidance.


During slow start, a TCP increments cwnd by at most SMSS bytes for each ACK received that cumulatively acknowledges new data. Slow start ends when cwnd exceeds ssthresh (or, optionally, when it reaches it, as noted above) or when congestion is observed. While traditionally TCP implementations have increased cwnd by precisely SMSS bytes upon receipt of an ACK covering new data, we RECOMMEND that TCP implementations increase cwnd, per:


cwnd += min (N, SMSS)      (2)

where N is the number of previously unacknowledged bytes acknowledged in the incoming ACK. This adjustment is part of Appropriate Byte Counting [RFC3465] and provides robustness against misbehaving receivers that may attempt to induce a sender to artificially inflate cwnd using a mechanism known as "ACK Division" [SCWA99]. ACK Division consists of a receiver sending multiple ACKs for a single TCP data segment, each acknowledging only a portion of its data. A TCP that increments cwnd by SMSS for each such ACK will inappropriately inflate the amount of data injected into the network.
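ACK Division is easy to reproduce in a toy model. The sketch below (hypothetical helpers, cwnd in bytes, starting from 10 segments) splits the ACK for one full segment into several partial ACKs and compares the classic per-ACK increase of SMSS with equation (2).

```python
def grow_per_ack(cwnd: int, acked_bytes: int, smss: int, abc: bool) -> int:
    """Slow-start increase for one ACK. With byte counting (equation (2)) the
    increase is min(N, SMSS); without it, every ACK adds a full SMSS."""
    return cwnd + (min(acked_bytes, smss) if abc else smss)

def after_ack_division(pieces: int, smss: int, abc: bool) -> int:
    """cwnd after a receiver splits the ACK for one SMSS-sized segment into `pieces` ACKs."""
    cwnd = 10 * smss
    chunk = smss // pieces
    for i in range(pieces):
        # the last partial ACK covers whatever remains of the segment
        acked = chunk if i < pieces - 1 else smss - chunk * (pieces - 1)
        cwnd = grow_per_ack(cwnd, acked, smss, abc)
    return cwnd

print(after_ack_division(4, 1460, abc=False))  # each partial ACK adds a full SMSS
print(after_ack_division(4, 1460, abc=True))   # total growth capped at the 1460 bytes actually ACKed
```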

During congestion avoidance, cwnd is incremented by roughly 1 full-sized segment per round-trip time (RTT). Congestion avoidance continues until congestion is detected. The basic guidelines for incrementing cwnd during congestion avoidance are:


- MAY increment cwnd by SMSS bytes
- SHOULD increment cwnd per equation (2) once per RTT
- MUST NOT increment cwnd by more than SMSS bytes

We note that [RFC3465] allows for cwnd increases of more than SMSS bytes for incoming acknowledgments during slow start on an experimental basis; however, such behavior is not allowed as part of the standard.

The RECOMMENDED way to increase cwnd during congestion avoidance is to count the number of bytes that have been acknowledged by ACKs for new data. (A drawback of this implementation is that it requires maintaining an additional state variable.) When the number of bytes acknowledged reaches cwnd, then cwnd can be incremented by up to SMSS bytes. Note that during congestion avoidance, cwnd MUST NOT be increased by more than SMSS bytes per RTT. This method both allows TCPs to increase cwnd by one segment per RTT in the face of delayed ACKs and provides robustness against ACK Division attacks.

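The byte-counting approach can be sketched as a small class. It assumes cwnd and SMSS are in bytes and carries any excess acknowledged bytes over to the next round; that carry-over and the class name are assumptions of this sketch. The usage mimics one RTT of delayed ACKs, each covering two segments.

```python
class ByteCountingCA:
    """Congestion avoidance by counting ACKed bytes: once a full cwnd's worth
    of new data has been acknowledged, grow cwnd by one SMSS."""

    def __init__(self, cwnd: int, smss: int):
        self.cwnd = cwnd
        self.smss = smss
        self.bytes_acked = 0  # the extra state variable the RFC mentions

    def on_ack(self, newly_acked: int) -> None:
        self.bytes_acked += newly_acked
        if self.bytes_acked >= self.cwnd:
            self.bytes_acked -= self.cwnd
            self.cwnd += self.smss  # never more than SMSS per cwnd of ACKed data

ca = ByteCountingCA(cwnd=10 * 1460, smss=1460)
for _ in range(5):          # 10 segments in flight, one delayed ACK per 2 segments
    ca.on_ack(2 * 1460)
print(ca.cwnd)              # grew by exactly one SMSS despite only 5 ACKs
```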

Another common formula that a TCP MAY use to update cwnd during congestion avoidance is given in equation (3):


cwnd += SMSS*SMSS/cwnd      (3)

This adjustment is executed on every incoming ACK that acknowledges new data. Equation (3) provides an acceptable approximation to the underlying principle of increasing cwnd by 1 full-sized segment per RTT. (Note that for a connection in which the receiver is acknowledging every-other packet, (3) is less aggressive than allowed -- roughly increasing cwnd every second RTT.)

Implementation Note: Since integer arithmetic is usually used in TCP implementations, the formula given in equation (3) can fail to increase cwnd when the congestion window is larger than SMSS*SMSS. If the above formula yields 0, the result SHOULD be rounded up to 1 byte.

Implementation Note: Older implementations have an additional additive constant on the right-hand side of equation (3). This is incorrect and can actually lead to diminished performance [RFC2525].
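Equation (3) with integer arithmetic, including the round-up-to-1 rule from the implementation note, can be sketched as follows. The helpers are hypothetical; the second print shows that when the receiver ACKs every other segment, one RTT yields only about half a segment of growth, in line with the RFC's remark on equation (3).

```python
def ca_increment(cwnd: int, smss: int) -> int:
    """Per-ACK congestion-avoidance increase from equation (3) using integer
    division; a zero result is rounded up to 1 byte as the RFC recommends."""
    return max((smss * smss) // cwnd, 1)

def cwnd_after_acks(cwnd: int, smss: int, acks: int) -> int:
    """Apply equation (3) once per ACK of new data."""
    for _ in range(acks):
        cwnd += ca_increment(cwnd, smss)
    return cwnd

SMSS = 1460
start = 20 * SMSS
print(cwnd_after_acks(start, SMSS, 20) - start)  # one ACK per segment for one RTT
print(cwnd_after_acks(start, SMSS, 10) - start)  # delayed ACKs: one ACK per two segments
print(ca_increment(3_000_000, SMSS))             # cwnd > SMSS*SMSS, so 0 is rounded up
```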

Implementation Note: Some implementations maintain cwnd in units of bytes, while others in units of full-sized segments. The latter will find equation (3) difficult to use, and may prefer to use the counting approach discussed in the previous paragraph.


When a TCP sender detects segment loss using the retransmission timer and the given segment has not yet been resent by way of the retransmission timer, the value of ssthresh MUST be set to no more than the value given in equation (4):


ssthresh = max (FlightSize / 2, 2*SMSS)      (4)

where, as discussed above, FlightSize is the amount of outstanding data in the network.

On the other hand, when a TCP sender detects segment loss using the retransmission timer and the given segment has already been retransmitted by way of the retransmission timer at least once, the value of ssthresh is held constant.


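The SMSS in the RFC is the negotiated MSS discussed earlier.

In short, cwnd starts at IW (IW stands for initial window), and ssthresh starts arbitrarily high.

For each ACK of new data received while sending:

- if cwnd <= ssthresh, slow start: cwnd = cwnd + min(N, MSS), where N is the number of previously unacknowledged bytes covered by the ACK;

- otherwise, congestion avoidance: cwnd = cwnd + MSS * MSS / cwnd.

If congestion occurs along the way (a timeout or duplicate ACKs):

- ssthresh = max(FlightSize / 2, 2 * MSS), where FlightSize is the number of bytes TCP has sent that have not yet been ACKed;

- if the congestion was signaled by a timeout, also set cwnd = LW = MSS (the loss window is one full-sized segment, regardless of IW).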
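The timeout response from RFC 5681 can be sketched as one function, with everything in bytes. The function name and the `already_retransmitted` flag are hypothetical; the flag stands for the RFC's distinction between a first timeout and a segment that has already been retransmitted by the timer.

```python
def on_retransmission_timeout(flight_size: int, smss: int, ssthresh: int,
                              already_retransmitted: bool) -> tuple[int, int]:
    """RFC 5681 response to a retransmission timeout. Returns (cwnd, ssthresh)."""
    if not already_retransmitted:
        # first timeout for this segment: equation (4)
        ssthresh = max(flight_size // 2, 2 * smss)
    # otherwise ssthresh is held constant
    cwnd = smss  # loss window LW: one full-sized segment, regardless of IW
    return cwnd, ssthresh

print(on_retransmission_timeout(40000, 1460, 10**9, already_retransmitted=False))
print(on_retransmission_timeout(2000, 1460, 10**9, already_retransmitted=False))
print(on_retransmission_timeout(40000, 1460, 12345, already_retransmitted=True))
```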

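The overall idea is unchanged; the details differ. Slow start still grows cwnd exponentially per RTT, but with byte counting each ACK adds at most the bytes it newly acknowledges (capped at one MSS), so a receiver cannot inflate cwnd by splitting ACKs. In congestion avoidance cwnd grows by at most one MSS per RTT, and with delayed ACKs (one ACK per two segments) equation (3) grows it only about once every second RTT.

The RFC also describes fast retransmit and fast recovery, again with only minor changes from classic TCP.

In short, with fast retransmit plus fast recovery, on the third duplicate ACK:

- ssthresh = max(FlightSize / 2, 2 * MSS);

- cwnd = ssthresh + 3 * MSS.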
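Fast retransmit and fast recovery can be sketched as a small state machine, following the steps in RFC 5681 section 3.2 as I read them: on the third duplicate ACK set ssthresh and inflate cwnd by 3 * SMSS, add one SMSS for each further duplicate ACK, and deflate cwnd to ssthresh when new data is ACKed. It is simplified (bytes only, no SACK, the retransmission itself is not modeled), and the class name is hypothetical.

```python
class RenoSender:
    """Simplified fast retransmit / fast recovery bookkeeping, in bytes."""

    def __init__(self, cwnd: int, ssthresh: int, smss: int):
        self.cwnd, self.ssthresh, self.smss = cwnd, ssthresh, smss
        self.dupacks = 0
        self.in_recovery = False

    def on_dupack(self, flight_size: int) -> None:
        self.dupacks += 1
        if self.dupacks == 3 and not self.in_recovery:
            # fast retransmit happens here; then enter fast recovery
            self.ssthresh = max(flight_size // 2, 2 * self.smss)
            # inflate for the 3 segments that have left the network
            self.cwnd = self.ssthresh + 3 * self.smss
            self.in_recovery = True
        elif self.in_recovery:
            self.cwnd += self.smss  # each further dupACK: another segment left

    def on_new_ack(self) -> None:
        if self.in_recovery:
            self.cwnd = self.ssthresh  # deflate; continue in congestion avoidance
            self.in_recovery = False
        self.dupacks = 0

s = RenoSender(cwnd=20 * 1460, ssthresh=10**9, smss=1460)
for _ in range(3):
    s.on_dupack(flight_size=20 * 1460)
print(s.ssthresh, s.cwnd)
s.on_new_ack()
print(s.cwnd)
```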

The biggest difference between the RFC and classic TCP regarding slow start is this passage in section 4.1, Restarting Idle Connections:

A known problem with the TCP congestion control algorithms described above is that they allow a potentially inappropriate burst of traffic to be transmitted after TCP has been idle for a relatively long period of time. After an idle period, TCP cannot use the ACK clock to strobe new segments into the network, as all the ACKs have drained from the network. Therefore, as specified above, TCP can potentially send a cwnd-size line-rate burst into the network after an idle period. In addition, changing network conditions may have rendered TCP's notion of the available end-to-end network capacity between two endpoints, as estimated by cwnd, inaccurate during the course of a long idle period.


[Jac88] recommends that a TCP use slow start to restart transmission after a relatively long idle period. Slow start serves to restart the ACK clock, just as it does at the beginning of a transfer. This mechanism has been widely deployed in the following manner. When TCP has not received a segment for more than one retransmission timeout, cwnd is reduced to the value of the restart window (RW) before transmission begins.


For the purposes of this standard, we define RW = min(IW,cwnd). Using the last time a segment was received to determine whether or not to decrease cwnd can fail to deflate cwnd in the common case of persistent HTTP connections [HTH98]. In this case, a Web server receives a request before transmitting data to the Web client. The reception of the request makes the test for an idle connection fail, and allows the TCP to begin transmission with a possibly inappropriately large cwnd.


Therefore, a TCP SHOULD set cwnd to no more than RW before beginning transmission if the TCP has not sent data in an interval exceeding the retransmission timeout.


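In short: if TCP has sent nothing for longer than one RTO, cwnd is cut to RW = min(IW, cwnd) before sending resumes, and slow start starts over!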
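The rule can be sketched as below, with cwnd in segments and times in milliseconds (the IW of 10 segments and the 200 ms RTO are hypothetical values). For comparison, the second function models, as far as I understand it, what Linux's tcp_cwnd_restart does instead: following the RFC 2861 congestion window validation approach, it halves cwnd once per elapsed RTO and never goes below the restart window. A long idle period therefore ends up at the same place as the RFC rule, while a short one keeps more of the window. Treat the Linux variant as an approximation, not kernel code.

```python
def rfc5681_restart(cwnd: int, iw: int, idle: int, rto: int) -> int:
    """RFC 5681 section 4.1: after sending nothing for longer than one RTO,
    cap cwnd at the restart window RW = min(IW, cwnd) before sending."""
    if idle > rto:
        return min(iw, cwnd)
    return cwnd

def linux_style_restart(cwnd: int, restart_cwnd: int, idle: int, rto: int) -> int:
    """Approximation of Linux's decay: halve cwnd once per full RTO of idle
    time, never dropping below min(restart_cwnd, cwnd)."""
    floor = min(restart_cwnd, cwnd)
    remaining = idle
    while True:
        remaining -= rto
        if remaining <= 0 or cwnd <= floor:
            break
        cwnd >>= 1
    return max(cwnd, floor)

print(rfc5681_restart(160, 10, idle=10_000, rto=200))      # 10 s idle: back to IW
print(linux_style_restart(160, 10, idle=10_000, rto=200))  # many halvings: also IW
print(linux_style_restart(160, 10, idle=700, rto=200))     # 3 full RTOs: 160 -> 20
```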

Summary

RFC 2414 also sums it up as follows:

TCP implementations use slow start in as many as three different ways:

- to start a new connection (the initial window);

- to restart a transmission after a long idle period (the restart window);

- to restart after a retransmit timeout (the loss window).

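How Restarting Idle Connections affects sparse connections

Reading the Restarting Idle Connections section made me break out in a cold sweat.

Our company's Linux kernels have this option enabled by default:

```shell
~ sysctl -a|grep slow_start
```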

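I immediately captured packets on the HTTP service I had just optimized to check for slow start caused by sparse requests. I went through dozens of packets in a row without seeing it, and the dashboards also showed the problem was completely solved, so I could rest easy.

Still, that was odd, so I pulled out SystemTap to debug the kernel.

Setting up a SystemTap environment on Ubuntu 22.04

My kernel version:

```shell
~ uname -r
```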

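Install the kernel debug symbols (dbgsym packages) that SystemTap depends on.

Import the GPG key for the debug-symbol repository:

```shell
~ sudo apt-key adv --keyserver keyserver.ubuntu.com --recv-keys C8CAB6595FDFF622
```

Install: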

```shell
# Set the variable in the current shell (not through sudo) so the heredoc below can expand it
~ codename=$(lsb_release -c | awk '{print $2}')
~ sudo tee /etc/apt/sources.list.d/ddebs.list << EOF
deb http://ddebs.ubuntu.com/ ${codename} main restricted universe multiverse
deb http://ddebs.ubuntu.com/ ${codename}-security main restricted universe multiverse
deb http://ddebs.ubuntu.com/ ${codename}-updates main restricted universe multiverse
deb http://ddebs.ubuntu.com/ ${codename}-proposed main restricted universe multiverse
EOF
~ sudo apt-get update
~ sudo apt-get install linux-image-$(uname -r)-dbgsym*
```

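Install SystemTap

The SystemTap shipped with Ubuntu 22.04 is too old to work with the 6.5.0 kernel.

Normally that would mean building SystemTap yourself, but fortunately the Ubuntu support team provides prebuilt packages:

```shell
~ add-apt-repository ppa:ubuntu-support-team/systemtap
~ apt update
~ apt-get install systemtap
```

Verify the SystemTap environment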

```
~ stap -ve 'probe begin { log("hello world") exit() }'
Pass 1: parsed user script and 484 library scripts using 146064virt/118272res/11904shr/105620data kb, in 140usr/7700sys/7924real ms.
Pass 2: analyzed script: 1 probe, 2 functions, 0 embeds, 0 globals using 147648virt/120192res/12288shr/107204data kb, in 30usr/140sys/170real ms.
Pass 3: using cached /root/.systemtap/cache/d6/stap_d688e4c93334e9681f5b55b5897fac35_1108.c
Pass 4: using cached /root/.systemtap/cache/d6/stap_d688e4c93334e9681f5b55b5897fac35_1108.ko
Pass 5: starting run.
hello world
Pass 5: run completed in 40usr/920sys/2340real ms.
```

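Instrumenting the kernel

write() ends up in tcp_sendmsg (the TCP send handler called by the socket layer, not itself a system call), which reaches the check through tcp_sendmsg -> tcp_sendmsg_locked -> tcp_skb_entail -> tcp_slow_start_after_idle_check.

In other words, the Restarting Idle Connections check runs when data to be sent is appended to the skb write queue.

```c
static inline void tcp_slow_start_after_idle_check(struct sock *sk)
```

The key question for the instrumentation is why tcp_cwnd_restart is not being called. Unfortunately tcp_slow_start_after_idle_check is an inline function and cannot be probed directly, so the only option is to print every variable it uses.

```
%{
```

Next I needed a simple client and server to test with. The client only has to send data every 10 s, and the server can simply be nc -l 12345. The client:

```python
import socket
```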
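A client of the kind described above might look like the following. This is a minimal sketch, not the author's tcpsend.py: it assumes one long-lived connection that writes a fixed-size burst every 10 seconds, with `nc -l 12345` as the server. The function name, the 200000-byte burst (an arbitrary size large enough to span several cwnd rounds) and the round count are hypothetical.

```python
import socket
import sys
import time

def send_periodically(host: str, port: int, payload_size: int = 200_000,
                      interval_s: float = 10.0, rounds: int = 6) -> None:
    """Keep one TCP connection open and write a burst every interval_s seconds,
    leaving the connection idle for longer than one RTO between bursts."""
    payload = b"x" * payload_size
    with socket.create_connection((host, port)) as sock:
        for i in range(rounds):
            sock.sendall(payload)
            print(f"round {i}: sent {payload_size} bytes", flush=True)
            if i < rounds - 1:
                time.sleep(interval_s)

if __name__ == "__main__" and len(sys.argv) == 3:
    # usage: python3 tcpsend_sketch.py HOST PORT
    send_periodically(sys.argv[1], int(sys.argv[2]))
```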

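Run the client:

```shell
~ python3 tcpsend.py x.x.x.x 12345
```

Run the kernel instrumentation script:

```shell
~ stap -g monitor_cwnd.stp -B CONFIG_MODVERSIONS=y
```

What matters is the first line of each 10-second round: cwnd starts at 10, grows to 160 in the second round, and shrinks to 4 in the third.

Did it shrink to 4 because of the tcp_slow_start_after_idle option? I turned the option off and tested again:

```shell
~ echo "net.ipv4.tcp_slow_start_after_idle=0" | sudo tee -a /etc/sysctl.conf
```

The output:

```
1718794057 @ port:54978, cwnd:186, tcp_slow_start_after_idle:0, packets_out:28, cong_control:1, delta:0, RTO:51, lsndtime:41141433
```

Toggling tcp_slow_start_after_idle had no effect at all on cwnd at each 10-second send.

Comparing with the implementation of tcp_slow_start_after_idle_check shows that cong_control is not NULL here, so tcp_cwnd_restart is never executed:

```c
// some details omitted
```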
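The early-return condition described above can be modeled in a few lines to make the decision explicit. This is a sketch reconstructed from my reading of tcp_slow_start_after_idle_check, not kernel code; the SockState fields mirror the variables the stap script prints, and treating delta and RTO as jiffies is an assumption. The first check uses the values from the printed line above.

```python
from dataclasses import dataclass

@dataclass
class SockState:
    """Hypothetical snapshot of the fields the stap script prints."""
    slow_start_after_idle: int  # net.ipv4.tcp_slow_start_after_idle
    packets_out: int            # segments still in flight
    has_cong_control: bool      # ca_ops->cong_control != NULL (true for BBR)
    delta: int                  # now - lsndtime (time since last send)
    rto: int                    # current retransmission timeout

def will_restart_cwnd(s: SockState) -> bool:
    """tcp_cwnd_restart runs only if the sysctl is on, nothing is in flight,
    the congestion module has no cong_control hook, and idle time > RTO."""
    if not s.slow_start_after_idle or s.packets_out or s.has_cong_control:
        return False
    return s.delta > s.rto

printed = SockState(0, 28, True, 0, 51)        # the printed line above
bbr_idle = SockState(1, 0, True, 10_000, 51)   # BBR after a 10 s pause
cubic_idle = SockState(1, 0, False, 10_000, 51)  # CUBIC after a 10 s pause
print(will_restart_cwnd(printed), will_restart_cwnd(bbr_idle), will_restart_cwnd(cubic_idle))
```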

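Checking the current congestion control algorithm:

```shell
~ sysctl -a|grep congestion_control
```

Here is where BBR initializes its congestion-control function pointers, and it explains everything: BBR implements its own cong_control function, so it does not follow the RFC's idle-restart rule (which makes sense, since BBR has its own startup phase).

```c
static struct tcp_congestion_ops tcp_bbr_cong_ops __read_mostly = {
```

Now look at CUBIC:

```c
static struct tcp_congestion_ops cubictcp __read_mostly = {
```

CUBIC does not implement its own cong_control function, so switch to CUBIC and test again to see whether the behavior matches the RFC:

```shell
~ echo "net.ipv4.tcp_congestion_control=cubic" | sudo tee -a /etc/sysctl.conf
```

Start the client again and rerun the test:

```shell
~ stap -g monitor_cwnd.stp -B CONFIG_MODVERSIONS=y
```

With cong_control now 0, every time data is sent after a 10-second pause cwnd is reset to 10, matching the RFC 6928 initial window mentioned above.

Checking the printed variables against the source, taking the first call in each 10-second round as an example, tcp_cwnd_restart is always executed, as expected.

```c
static inline void tcp_slow_start_after_idle_check(struct sock *sk)
```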

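Conclusion

This article analyzed a latency increase caused by a service scale-out. Scaling out made connections very sparse, so they hit the HTTP keep-alive idle timeout on the client or server side; each request then effectively used a new short-lived connection, paying one extra RTT for the three-way handshake plus several RTTs of slow start.

It then dug into the history and the current standard of slow start, which happens in three situations:

- when a connection has just been established;

- when a connection resumes sending after being idle for a while;

- when a retransmission timeout occurs.

This revealed that our kernel setting tcp_slow_start_after_idle could bite us in the second case.

Instrumenting the kernel confirmed that this setting has no effect while BBR is enabled, so the high latency caused by sparse requests is fully resolved.

References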

TCP slow start, is it good or not?

RFC 5681: TCP Congestion Control

BBR

Congestion Window Validation (CWV)

SystemTap

Error when running a stap test case: "insmod: can't insert 'xx.ko': invalid module format"